With eBay remaining a core data source for cross-border sellers in areas like product selection, pricing, and competitor monitoring, more teams are starting to scrape data from eBay for purposes like dynamic pricing, identifying reselling opportunities, category trend analysis, supply chain price comparisons, and seasonal fluctuations. However, a common issue arises: the code works fine, but data cannot be fetched, or accounts get banned after a few days. To consistently collect eBay product data, the key is to create a collection environment that closely resembles a real buyer’s behavior.

I. What Data is Needed for eBay Price Analysis?

1.Core Product Data

- Product ID: Unique identifier, deduplication baseline

- Title: Keyword mining, category mapping

- Current Price: Real-time price comparison, adjustment basis

- Strikethrough Price: Discount intensity analysis

- Shipping Cost: Final price calculation

- Product Condition: New/Used, price comparison premise

2.Sales and Seller Data

- Monthly Sales/History: Price-sales elasticity analysis

- Seller ID: Tracking competitor pricing strategies

- Seller Rating: Impact of reputation on pricing premium

- Store Type: Business vs. individual seller

3.Promotions and Price Derivatives

- Coupons: Real transaction price restoration

- Bulk Discounts: Bulk purchase scenarios

- Price History Trends: Dynamic pricing decisions

- Out of Stock/End of Listing: Reselling opportunity window

4.Auxiliary Analysis Data

- Listing Time: New product identification

- Category Path: Price band distribution

- Product Attributes: Basis for comparing identical products

- Note: Data collection difficulty varies. Product detail pages, historical sales, and logged-in fields are high risk for account bans, while search result pages and public category pages are more lenient.

II. Top 3 Tools for Scraping eBay Product Price Data

Method 1: Official Tools

1.eBay API

- Features: Zero ban risk, structured data

- Can Fetch: Product ID, title, current price, shipping cost, seller name, listing time

- Cannot Fetch: Historical sales, price trends, coupons, out of stock status

- Best for: Brand sellers, compliance priority, businesses with budget

2.eBay Seller Hub

- Features: Built-in market analysis tools, no technical skills required

- Can Fetch: Category average price, hot selling price ranges, historical transaction trends

- Cannot Fetch: Cannot export raw data, cannot track specific competitors

- Best for: Individual sellers quickly understanding market prices

Switching between API for compliance and Seller Hub for convenience has limitations in field access, lack of customization, and superficial competitor insights. If your business needs to focus on specific competitors, analyze historical price fluctuations, and capture real transaction prices after coupons, these official tools may not meet your needs.

Method 2: Third-Party Scraping Software

If you don’t need to scrape tens of thousands of records daily and lack development resources, third-party tools provide the quickest way to collect data.

1.Browser Extensions (e.g., Instant Data Scraper)

- Operation: Highlight the price area and export to CSV with one click

- Advantages: 5-minute setup, no code

- Drawbacks: Can freeze after 10 pages, no login-based scraping

- Best for: Temporary price comparison, small-scale research (≤20 SKUs)

2.Professional Scraping Platforms (e.g., WebScraper)

- Operation: Visual workflow to schedule scraping on cloud servers

- Advantages: Supports login, scheduled tasks, no server maintenance

- Drawbacks: Monthly fee of $200–$1000, costs increase with scale

- Best for: Small to medium-sized sellers monitoring 20–100 competitors without development resources

For scraping under 500 SKUs, third-party tools are efficient. For more than 500 SKUs, the cost increases, maintenance becomes harder, and response times slow—this is when code-based solutions are necessary.

Method 3: Code-Based Crawlers

When you need to monitor thousands of SKUs daily, collect fields unavailable through APIs, or build your own historical price database, code crawlers are highly effective.

1.Python + Requests + Beautiful Soup

- Logic: Send requests to retrieve HTML → Parse and extract prices → Store data

- Advantages: High flexibility, can collect API unavailable fields

- Drawbacks: Requests-based connections have very low success rates in 2026

- Best for: Technical validation, temporary tasks, small-scale scraping with high-quality proxies

2.Python + Playwright/Selenium

- Logic: Browser automation, simulate real user behavior

- Advantages: Bypass TLS fingerprinting detection, stable login state

- Drawbacks: Low performance, single machine struggles with >5000 records/day

- Best for: Medium-scale scraping requiring login states and complex interactions

3.Java + Jsoup + HttpClient

- Logic: Connection pooling, multithreading, proxy middleware

- Advantages: Memory control, stable 24/7 operation

- Best for: Scraping tens of thousands of SKUs daily, large data collection platforms

III. Why is eBay Data Collection Difficult?

Many beginners assume that the challenge of scraping eBay data lies in writing code, but they soon realize that even if the code is perfect, they can’t collect data or their accounts are banned after a few days. This issue isn’t technical—it’s related to eBay’s anti-bot mechanisms.

1.IP Layer

Data center IPs have short lifespans, eBay recognizes cloud IP ranges

Shared IPs result in entire IP segments being flagged

IP location changes frequently, not consistent with account registration or login locations

2.Request Layer

Requests per IP exceed threshold

No randomization in request intervals, presenting a fixed pattern

Only data interfaces are requested, no page resources loaded

Shallow page access, only staying on product detail pages

3.Fingerprint Layer

Browser fingerprint not altered, identified as the same device

Headless browsers expose automation features

WebRTC not disabled, real IP might leak even under proxy

4.Login Layer

New accounts scraped at high frequencies without warming up, leading to abnormal behavior

Scraping accounts share IPs with main store accounts, risking association

Multiple scraping accounts log in to the same IP, creating batch operation risks

5.Maintenance Layer

No log monitoring, no awareness when accounts are banned

No error handling, captcha causes crashes

Scraping strategy not updated, unable to adapt to eBay’s evolving anti-scraping measures

IV. How to Successfully Build an eBay Data Scraping System?

In a data scraping system, what truly determines how long the collection can run isn’t just the quality of the code—it’s the proxy pool and request behavior control. As the scraping task moves into long-term monitoring, bottlenecks often occur in the following areas:

- Is the IP truly from a residential source?

- Does the IP’s country match the collection target?

- Can the system detect when an IP is banned and replace it?

- Does the proxy service allow long-term, medium-high frequency data collection?

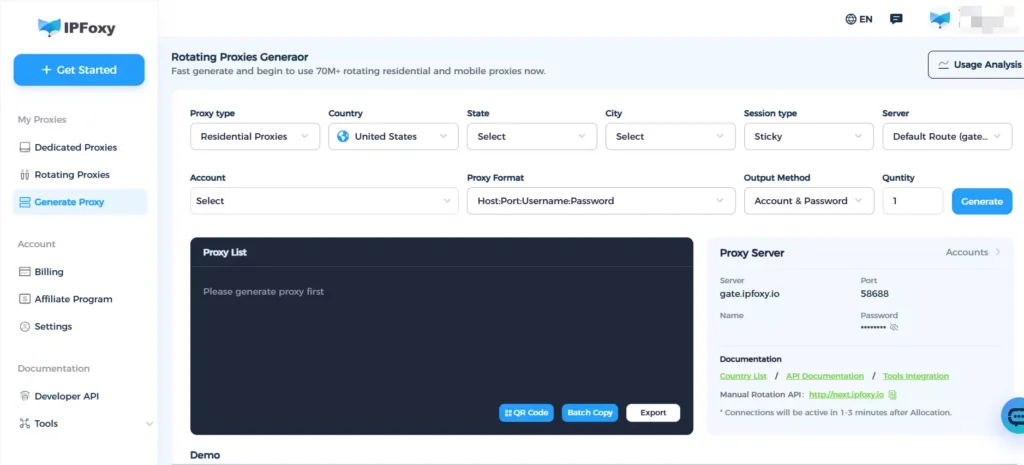

Many proxies will have drastically reduced success rates once continuous requests begin. For eBay’s long-term price monitoring, choosing a professional proxy service with compliant IP acquisition is crucial for maintaining a low IP repetition rate. Below is the performance test of IPFoxy in data collection scenarios:

- Over 90 million IPs, low IP repetition rate, covering 200+ regions globally

- Supports sticky sessions and two rotation modes, with sticky duration exceeding 30 minutes

- Provides API-level scheduling control, compatible with automated collection architectures

V. FAQ

eBay explicitly prohibits unauthorized automation in its robots.txt file, but public data scraping exists in a legal gray area. Avoid these three red lines:

Do not put pressure on the servers

Do not scrape non-public data (e.g., buyer privacy)

Do not engage in malicious reselling, infringement, or fraud

There is no absolute safe number, but here are some thresholds:

Single IP + Single Fingerprint: ≤3000 requests/day

Single IP + Single Fingerprint + Login State: ≤1000 requests/day

Single Account: ≤500 detail page requests/day

Exceeding these thresholds increases the risk of being banned.

Yes. Scraping accounts and main accounts should have isolation on IP, device, fingerprint, and payment methods.

VI. Conclusion

For eBay operations, choosing the right scraping tools and technology is crucial, but anti-bot mechanisms and account bans often pose challenges. To scrape data consistently, you must simulate real user behavior, use high-quality proxy pools, control requests carefully, and manage browser fingerprints. With these strategies, you can efficiently collect valuable data to support business decisions.