Google Maps has become a crucial data source for many business and AI training scenarios. However, Google’s anti-scraping mechanisms make it difficult to collect this data efficiently, especially at scale. To keep data collection uninterrupted and avoid bans, choosing the right dynamic IP proxies is essential. This article explains how to scrape Google Maps data efficiently and introduces the latest dynamic IP strategies for 2026.

I. Commercial Use Cases for Google Data Scraping

In the data services market, Google data is seen as a core “production material.” Many B2B companies rely on scraping Google’s global geographical, search, and business review data to create standardized SaaS products or industry solutions, including:

1. Global Travel and Personalized Itinerary Planning Projects

AI travel assistants and custom itinerary apps scrape point-of-interest ratings, business hours, real-time foot traffic, and user reviews from Google Maps and Google Search. They use this data to refresh smart route plans at minute-level granularity or to generate plans tailored to preferences such as “fewer crowds, higher ratings, vegetarian options nearby.”

2. Logistics and Supply Chain Geospatial Services

Companies scrape geographic data, historical traffic patterns, and even building locations from Street View on Google Maps to optimize “last mile” delivery routes and cut transportation costs, then sell these high-precision route planning capabilities to e-commerce platforms as APIs.

3. AI Tools and Knowledge Base Enhancement Services

Scraping real-time search engine results pages (SERP) and Google News gives large language models (LLMs) an up-to-date external knowledge source, mitigating the problem of outdated training data.

4. E-commerce and Price Monitoring Tools

Large-scale scraping of Google Shopping tracks product prices and ad rankings across regions worldwide. This gives companies real-time insight into competitor pricing and traffic distribution, helping them stay competitive in complex international markets.

II. The Role of Dynamic IPs in Large-Scale Google Maps Data Scraping

Dynamic IPs are essential to bypassing Google Maps’ anti-scraping mechanisms. Without them, large-scale scraping becomes nearly impossible.

- Avoiding IP Blocks

Google monitors and throttles frequent requests from the same IP. Rotating dynamic IPs spreads requests across many addresses, so high-volume tasks such as collecting delivery paths and comparing routes can run continuously without triggering IP bans.

- Simulating Real Users

Concentrated, regular traffic is easily identified as bot behavior. Dynamic residential IPs from different regions and carriers make your traffic resemble that of ordinary users, which lowers the risk of being blocked by Google while you collect localized business data.

- Accessing Regional Content

Google Maps shows different businesses, hotels, and attractions depending on the viewer’s location. Dynamic IPs, especially residential IPs with city-level targeting, let you retrieve this region-specific content accurately anywhere in the world, for example to power local recommendations that support consumer decisions.

- Improving Data Collection Efficiency

Each IP can only send requests at a limited rate, and rapid requests trigger Google Maps’ anti-scraping mechanisms. A dynamic IP pool spreads the load across many IPs, enabling large-scale scraping in high-concurrency environments. This matters for demanding use cases such as AR applications that blend virtual content with real-world map data.
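To make the idea of a dynamic IP pool more concrete, here is a minimal client-side sketch: proxies are handed out round-robin, and a proxy that gets blocked is benched for a cooldown period before it is reused. The class name `ProxyPool`, the 300-second cooldown, and the proxy URLs are hypothetical; commercial providers often rotate IPs for you behind a single gateway, in which case this logic lives on their side.

```python
from __future__ import annotations

import itertools
import time
from dataclasses import dataclass, field


@dataclass
class ProxyPool:
    """Round-robin proxy pool that benches a proxy for a while after it is blocked."""

    proxies: list[str]
    cooldown_s: float = 300.0  # assumption: 5-minute penalty for a blocked exit IP
    _benched: dict[str, float] = field(default_factory=dict)

    def __post_init__(self) -> None:
        if not self.proxies:
            raise ValueError("proxy list must not be empty")
        self._cycle = itertools.cycle(self.proxies)

    def next_proxy(self, now: float | None = None) -> str:
        """Return the next proxy in rotation, skipping any still cooling down."""
        now = time.monotonic() if now is None else now
        for _ in range(len(self.proxies)):  # check each proxy at most once per call
            proxy = next(self._cycle)
            until = self._benched.get(proxy)
            if until is None or until <= now:
                self._benched.pop(proxy, None)
                return proxy
        raise RuntimeError("every proxy is cooling down; slow down or add IPs")

    def report_block(self, proxy: str, now: float | None = None) -> None:
        """Call when a request through `proxy` was blocked (e.g. HTTP 429)."""
        now = time.monotonic() if now is None else now
        self._benched[proxy] = now + self.cooldown_s
```

Each returned URL (for example a hypothetical `http://user:pass@proxy.example.com:8000`) can be passed to `urllib.request.ProxyHandler({"http": url, "https": url})` when building an opener for the next request.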

Note: Even with dynamic IPs, you must comply with Google’s terms of service. This technology is meant to improve efficiency and stability, not to enable unlimited abuse. For critical tasks, prefer Google Maps’ official API.
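Since the note recommends the official API for critical tasks, the sketch below shows how a request to the Places API (New) Text Search endpoint might be built with Python’s standard library. The endpoint and the `X-Goog-Api-Key` / `X-Goog-FieldMask` headers follow Google’s Places API (New) documentation as we understand it; the helper name, the chosen fields, and the example query are illustrative assumptions, and a real call needs a billing-enabled API key.

```python
import json
import urllib.request

PLACES_TEXT_SEARCH_URL = "https://places.googleapis.com/v1/places:searchText"


def build_text_search_request(
    query: str,
    api_key: str,
    fields: tuple[str, ...] = (
        "places.displayName",
        "places.formattedAddress",
        "places.rating",
    ),
) -> urllib.request.Request:
    """Build (but do not send) a Places API (New) Text Search request.

    The field mask limits the response to the listed fields; it is required
    by this API and also affects billing.
    """
    body = json.dumps({"textQuery": query}).encode("utf-8")
    return urllib.request.Request(
        PLACES_TEXT_SEARCH_URL,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "X-Goog-Api-Key": api_key,
            "X-Goog-FieldMask": ",".join(fields),
        },
    )
```

To send it: `with urllib.request.urlopen(req) as resp: data = json.load(resp)`. Check Google’s current documentation for quotas and pricing before relying on it at scale.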

III. Choosing the Right Dynamic IP Service for Google Maps Scraping

Google Maps’ anti-scraping mechanisms are highly sensitive, and the returned data must meet strict accuracy requirements. IP service selection should therefore be tailored closely to the scenario.

- Real and Precise Residential IPs

Google’s risk control system can easily identify and block data center IPs. Using residential IPs sourced from real home broadband is the most effective way to avoid detection and simulate real user behavior.

- Real Residential Exit: The IP’s ASN (Autonomous System Number) clearly belongs to a mainstream residential network operator, not a data center.

- Precise Geolocation: Offers city/state-level IP location, ensuring accurate data retrieval.

- High Purity: The IP hasn’t been extensively flagged or abused by Google.

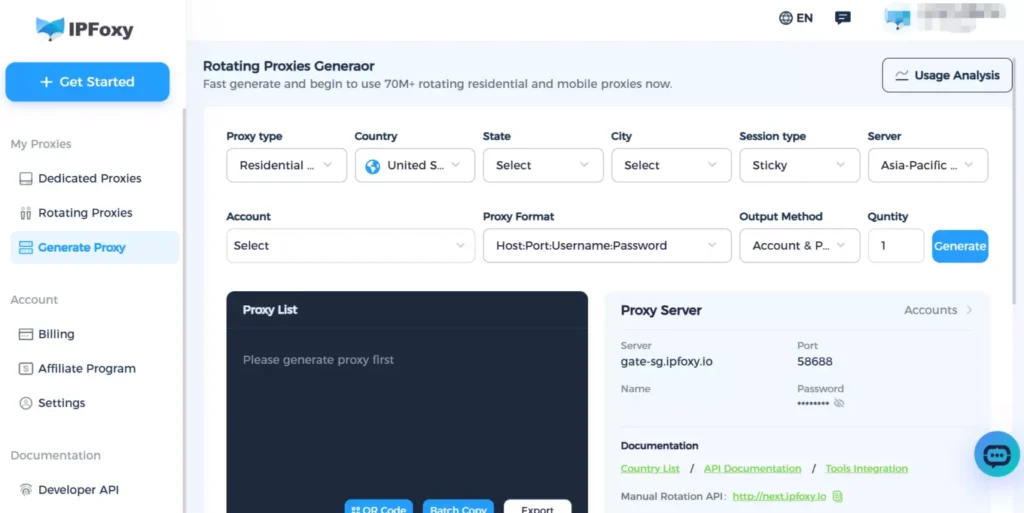

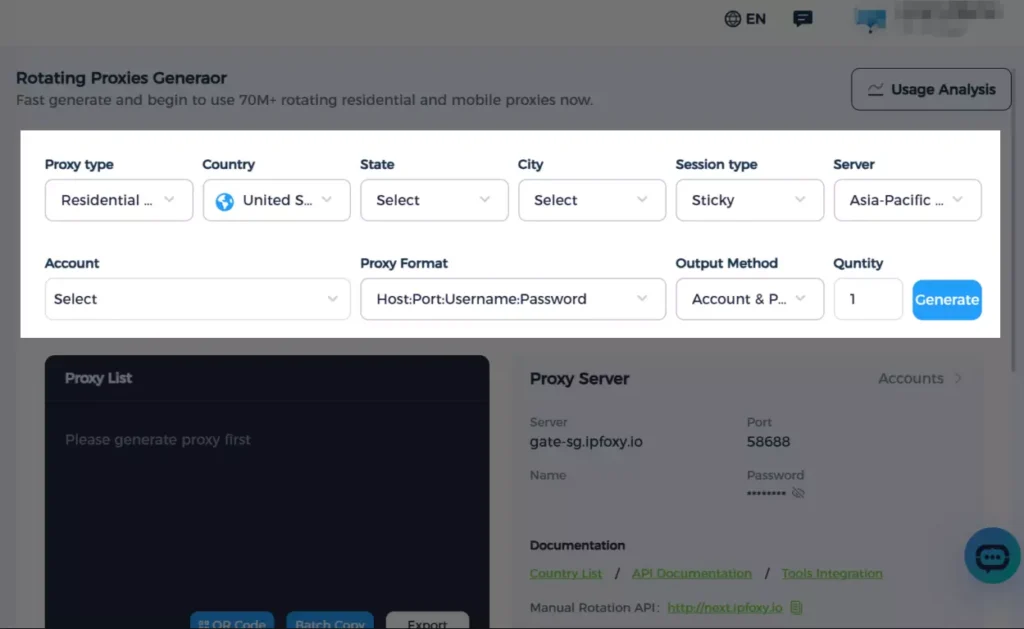

For large-scale data scraping from Google Maps, IPFoxy offers real residential IPs sourced from home broadband networks, which are more stable than data center IPs and better at simulating real user behavior. Covering more than 200 regions worldwide, the service supports flexible collection across locations, with request rotation, sticky sessions, and switching via API or port.

- Supporting High Frequency and Continuous Monitoring

Large-scale data collection requires high-frequency requests, while long-term monitoring of specific locations needs session stability.

- Request Rotation: Suitable for large-scale, one-time POI (point of interest) list collection, rotating IPs per request to minimize risk.

- Sticky Sessions: Useful for monitoring specific business ratings, reviews, or multi-step searches, maintaining the same IP for a set period to avoid task interruption.
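The difference between the two modes can be illustrated with a small client-side router: `rotating()` returns a new exit proxy on every call, while `sticky(key)` keeps returning the same proxy for a given task key (for example, one business whose reviews you are monitoring) until a time-to-live expires. This is a hypothetical sketch; many providers implement sticky sessions on their side instead, often via a session parameter whose format is provider-specific.

```python
from __future__ import annotations

import itertools
import time


class SessionRouter:
    """Chooses a proxy per request ("rotate") or per task key ("sticky")."""

    def __init__(self, proxies: list[str], sticky_ttl_s: float = 600.0) -> None:
        self._cycle = itertools.cycle(proxies)
        self._ttl = sticky_ttl_s  # assumption: 10-minute sticky window
        self._sticky: dict[str, tuple[str, float]] = {}

    def rotating(self) -> str:
        """Request rotation: a different exit IP for every call."""
        return next(self._cycle)

    def sticky(self, session_key: str, now: float | None = None) -> str:
        """Sticky session: reuse the same IP for `session_key` until the TTL expires."""
        now = time.monotonic() if now is None else now
        entry = self._sticky.get(session_key)
        if entry is not None and entry[1] > now:
            return entry[0]
        proxy = next(self._cycle)
        self._sticky[session_key] = (proxy, now + self._ttl)
        return proxy
```

Rotation suits one-off POI list sweeps; sticky keys suit multi-step flows where an IP change mid-task could look suspicious or break the session.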

- Low Latency and High Stability

Google Maps pages are complex, and data collection tasks usually require high concurrency to improve efficiency.

- Speed: Latency should be under 1 second to avoid slowing down the overall collection process.

- Stability: The interruption rate should be extremely low, supporting long, high-volume requests.

- Concurrency: Supports over 50 concurrent threads per account, ensuring scalability for large-scale data collection.
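One way to respect a concurrency limit while checking the latency target is to cap in-flight requests with `asyncio.Semaphore` and time each one. In this sketch, `fetch` is a placeholder for whatever async HTTP call you use through the proxy, and the limits of 50 concurrent requests and 1 second simply mirror the figures above; both are assumptions to tune for your provider.

```python
import asyncio
import time
from typing import Awaitable, Callable

MAX_CONCURRENCY = 50  # mirrors the per-account concurrency figure above
MAX_LATENCY_S = 1.0   # mirrors the latency target above


async def run_bounded(
    urls: list[str],
    fetch: Callable[[str], Awaitable[str]],
    max_concurrency: int = MAX_CONCURRENCY,
) -> list[tuple[str, str, float]]:
    """Fetch all URLs with at most `max_concurrency` requests in flight.

    Returns (url, body, elapsed_seconds) tuples in input order.
    """
    sem = asyncio.Semaphore(max_concurrency)

    async def worker(url: str) -> tuple[str, str, float]:
        async with sem:
            start = time.perf_counter()
            body = await fetch(url)
            return url, body, time.perf_counter() - start

    return list(await asyncio.gather(*(worker(u) for u in urls)))


def slow_requests(
    results: list[tuple[str, str, float]], limit: float = MAX_LATENCY_S
) -> list[str]:
    """URLs whose request exceeded the latency target, useful for spotting weak proxies."""
    return [url for url, _, elapsed in results if elapsed > limit]
```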

- Transparent Billing and Immediate Technical Support

During the scraping process, issues like IP failures and API changes may arise, so choosing an IP service with transparent billing and robust technical support is crucial.

- Transparent Billing: Prefer a pay-per-usage model, avoiding hidden throttling fees.

- Technical Support: Fast online response and clear API documentation help resolve issues during IP pool fluctuations or scraping rule changes.

IV. FAQ

Q: Can I use data center proxies to scrape Google Maps?

A: No. Google Maps can identify and block data center IP ranges with high precision, so data center proxies are quickly blocked and your scraping tasks stop immediately. Real residential IPs come from home networks and show behavior patterns that closely resemble those of real users, which makes them effective against Google’s advanced anti-scraping systems.

Q: Why don’t my scraped results match the local listings for my target city?

A: Google Maps determines the city or region from the user’s IP and returns location-specific results. If your proxy IP is located in “USA” rather than “New York City,” you may get nationwide results instead of the local New York listings.

Always choose a residential proxy service that supports city-level (or even postal code-level) precise geolocation. Verify the specific city of the IP before starting data collection to ensure geographic accuracy.
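Verifying the exit city before a run can be automated: send one request through the proxy to an IP-geolocation service and compare the reported city and country with your target. The sketch assumes `https://ipinfo.io/json`, which returns fields such as `city` and `country` (a two-letter code); any comparable service would work, and the helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: any IP-geolocation service returning JSON with "city"/"country" works.
GEO_ENDPOINT = "https://ipinfo.io/json"


def exit_location(proxy_url: str, timeout: float = 10.0) -> dict:
    """Ask the geolocation service where this proxy's exit IP appears to be."""
    opener = urllib.request.build_opener(
        urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    )
    with opener.open(GEO_ENDPOINT, timeout=timeout) as resp:
        return json.load(resp)


def matches_target(info: dict, city: str, country: str) -> bool:
    """Case-insensitive check that the reported city and country match the target."""
    return (
        info.get("city", "").casefold() == city.casefold()
        and info.get("country", "").casefold() == country.casefold()
    )
```

For example, skip any proxy for which `matches_target(exit_location(url), "New York", "US")` is false before starting a New York collection job.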

Q: Why am I still getting blocked even with residential IPs?

A: This indicates that your scraping strategy needs immediate adjustment. Even high-quality residential IPs can trigger Google’s protections if you send requests too frequently (e.g., several requests per second).

- Reduce Frequency: Increase the random interval between requests (e.g., 2-5 seconds) to simulate human browsing behavior.

- Check Rotation Settings: Ensure that “request rotation” is enabled, or shorten the session duration for “sticky sessions.”

- Verify IP Quality: Check whether the quality of the current IP pool has declined.

- Use a Backup: Switch to a different IP pool or service.
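The adjustments above can be expressed as a few small helpers: a random 2-5 second pause between requests, exponential backoff with jitter after blocks, a block detector, and a rule for failing over to a backup pool. The block heuristic (HTTP 429 or a redirect to a URL containing `/sorry/`, where Google typically shows its unusual-traffic page) and the failover threshold of 3 are assumptions to adapt to what you actually observe.

```python
import random

_rng = random.Random()


def polite_delay(low: float = 2.0, high: float = 5.0, rng: random.Random = _rng) -> float:
    """Random pause in seconds between requests, per the 2-5 s guidance above."""
    return rng.uniform(low, high)


def backoff_delay(attempt: int, base: float = 5.0, cap: float = 300.0,
                  rng: random.Random = _rng) -> float:
    """Exponential backoff with full jitter; `attempt` counts consecutive blocks from 0."""
    return rng.uniform(0.0, min(cap, base * 2 ** min(attempt, 16)))


def looks_blocked(status: int, final_url: str) -> bool:
    """Heuristic (assumption): HTTP 429, or a redirect to Google's /sorry/ page."""
    return status == 429 or "/sorry/" in final_url


def choose_pool(consecutive_blocks: int, primary: str, backup: str, threshold: int = 3) -> str:
    """Fail over to the backup pool once blocks keep piling up (threshold is arbitrary)."""
    return backup if consecutive_blocks >= threshold else primary
```

In a scraping loop you would call `time.sleep(polite_delay())` between requests and `time.sleep(backoff_delay(n))` after the n-th consecutive block, resetting the counter after a successful response.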

V. Conclusion

By selecting the right dynamic IP proxy and applying best practices for Google Maps data scraping, you can effectively bypass Google’s anti-scraping mechanisms and ensure stable, efficient data collection. Never overlook the importance of IP quality and ensure the appropriate proxy service is configured based on your specific needs. Continuously monitor the accuracy and stability of proxy services to guarantee that your collected data meets expectations. With the right IP proxy strategy, Google Maps data collection will become much smoother.